On October 16, at the 2018 XDF Xilinx Developer Conference held in Beijing, Inspur and Xilinx announced the launch of the F37X, the world's first FPGA AI accelerator card with integrated HBM2 cache. At a typical application power consumption of less than 75W, it delivers 28.1 TOPS of INT8 computing performance and 460GB/s of data bandwidth. The card targets application scenarios such as machine learning inference, video transcoding, image recognition, speech recognition, natural language processing, genome sequencing analysis, NFV, and big data analysis and query, providing high-performance, high-bandwidth, low-latency and low-power AI computing acceleration.

Inspur Group Vice President Li Jin delivered a keynote speech at the 2018 XDF Xilinx Developer Conference

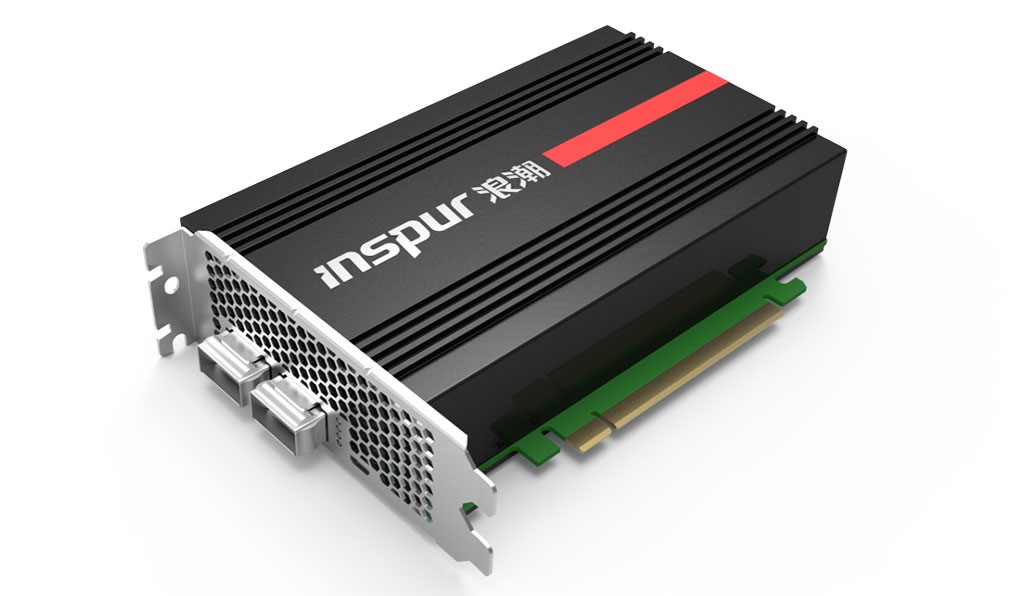

The F37X is an FPGA accelerator card that Inspur designed specifically for high AI performance. It adopts the Xilinx Virtex UltraScale+ architecture and provides 2.85 million system logic cells and 9,024 DSP units. Its INT8 computing performance reaches 28.1 TOPS, and its integrated 8GB HBM2 cache offers bandwidth of up to 460GB/s, 20 times that of a single DDR4 DIMM. When an AI model is smaller than the HBM2 capacity, the whole model can be preloaded into the cache. This eliminates the data transfer delay caused by external memory reads and writes, increases processing speed, and makes it possible to keep the entire AI computation on the chip.

Performance data show that in real-time AI image recognition inference with the GoogLeNet deep learning model at a batch size of 1, the F37X processes up to 8,600 images/s, 40 times the performance of a CPU. Typical application power consumption is only 75 watts, for a performance-to-power ratio of about 375 Gops/W. In addition, the F37X has 24GB of onboard DDR4 memory and a dual-port 100Gbps high-speed network interface. It is designed as a full-height, half-length PCI-E 3.0 board, and this compact size lets a single AI server hold more accelerator cards, increasing the computing and communication performance available per server.
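The headline ratios above can be checked with simple arithmetic. The following is a minimal sketch, not vendor tooling: the function names are hypothetical, the 8GB HBM2 capacity is assumed to mean 8 × 10^9 bytes, and the 50 MB model size in the usage section is an illustrative placeholder rather than a measured figure.

```python
"""Back-of-the-envelope checks on the F37X figures quoted in the article."""


def perf_per_watt_gops(tops: float, watts: float) -> float:
    """Convert peak TOPS and power in watts into Gops per watt."""
    return tops * 1000.0 / watts


def implied_baseline(value: float, ratio: float) -> float:
    """Given a figure quoted as 'ratio times' some baseline, recover the baseline."""
    return value / ratio


def fits_in_hbm(model_bytes: int, hbm_bytes: int = 8 * 10**9) -> bool:
    """Return True if a model of the given size fits entirely in HBM2.

    Assumption: "8GB" is taken as 8 * 10**9 bytes, and any space needed
    for activations or buffers is ignored.
    """
    return model_bytes <= hbm_bytes


if __name__ == "__main__":
    # 28.1 TOPS at 75 W, as quoted in the article.
    print(f"Perf/W: {perf_per_watt_gops(28.1, 75):.1f} Gops/W")

    # 460 GB/s is described as 20x a single DDR4 DIMM.
    print(f"Implied DDR4 DIMM bandwidth: {implied_baseline(460, 20):.1f} GB/s")

    # 8,600 images/s is described as 40x CPU performance.
    print(f"Implied CPU throughput: {implied_baseline(8600, 40):.1f} images/s")

    # Hypothetical 50 MB model, chosen only to illustrate the check.
    print(f"50 MB model fits in HBM2: {fits_in_hbm(50 * 10**6)}")
```

The first figure comes out slightly under 375 Gops/W, which matches the article's number after rounding. The implied baselines (about 23 GB/s for one DDR4 DIMM and about 215 images/s for the CPU) are derived from the article's own ratios and are not independently measured values.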

The F37X, the world's first FPGA AI accelerator card with integrated HBM2

The F37X supports three mainstream development environments: C/C++, OpenCL and RTL. The corresponding SDx tool suite includes SDAccel™, Vivado® and SDK tools, and draws on existing acceleration libraries such as OpenCV, BLAS, Encoder, DNN and CNN to support mainstream deep learning frameworks such as Caffe, TensorFlow, Torch and Theano. It covers typical AI application fields such as machine learning inference, video and image processing, database analysis, finance and security. This ecosystem support makes the card easier to program and allows custom AI algorithms to be developed and migrated flexibly and quickly, a qualitative leap in software productivity.

Li Jin, vice president of Inspur Group, said: "AI is reshaping industrial innovation at an unprecedented speed. AI algorithms will continue to iterate rapidly, and online inference will become the main scenario for AI computing. Inspur has been committed to innovating in FPGA software and hardware technologies to help customers keep a leading edge in AI computing. The Inspur F37X accelerator card will provide global users with a fast, customizable, real-time, high-performance, high-density and low-power FPGA solution to accelerate the online deployment of AI applications."

Freddy Engineer, vice president of data center sales at Xilinx, said: "Inspur is well known for its product implementation and innovation. Inspur will provide customers with servers equipped with Xilinx accelerator cards, and we are proud of this. We are very happy to be a development partner of Inspur and to jointly launch the breakthrough F37X, which provides unprecedented memory bandwidth through HBM2 and will accelerate data analysis, AI, and workloads that require the lowest-latency data access."

Inspur is a world-leading AI computing vendor. It is committed to building agile, efficient and optimized AI infrastructure at four levels: computing platform, management suite, framework optimization, and application acceleration. Inspur helps AI customers achieve order-of-magnitude improvements in application performance in voice, image, video, search, and networking. According to IDC's "2017 China AI Infrastructure Market Survey Report", Inspur's AI server market share reached 57%, ranking first.
